Sending your information off to another person’s cloud to run an AI mannequin can really feel like handing your own home keys to a stranger. There’s at all times the possibility that you just’ll come residence to seek out that they absconded with all of your valuables or left an enormous mess so that you can clear up (at your value, in fact). Or what in the event that they modified the locks and now you possibly can’t even get again in?!

In the event you’ve ever wished extra management or peace of thoughts over your AI, the answer could be proper below your nostril: internet hosting AI fashions regionally. Sure, by yourself {hardware} and below your personal roof (bodily or digital). It’s sort of like deciding to prepare dinner your favourite dish at residence as an alternative of ordering takeout. You realize precisely what goes into it; you fine-tune the recipe, and you may eat anytime you need — no relying on anybody else to get it proper.

On this information, we’ll break down why native AI internet hosting might rework the way in which you’re employed, what {hardware} and software program you want, learn how to do it step-by-step, and greatest practices to maintain every part operating easily. Let’s dive in and provide the energy to run AI by yourself phrases.

What Is Regionally Hosted AI (and Why You Ought to Care)

Regionally hosted AI means operating machine studying fashions instantly on gear you personal or totally management. You should use a house workstation with a good GPU, a devoted server in your workplace, or perhaps a rented bare-metal machine, if that fits you higher.

Why does this matter? Just a few essential causes…

- Privateness and information management: No delivery delicate data off to third-party servers. You maintain the keys.

- Quicker response occasions: Your information by no means leaves your community, so that you skip the round-trip to the cloud.

- Customization: Tweak, fine-tune, and even re-architect your fashions nonetheless you see match.

- Reliability: Keep away from downtime or utilization limits that cloud AI suppliers impose.

After all, internet hosting AI your self means you’ll handle your individual infrastructure, updates, and potential fixes. However if you wish to be certain that your AI is really yours, native internet hosting is a game-changer.

| Execs | Cons |

| Safety and information privateness: You’re not sending proprietary information to exterior APIs. For a lot of small companies coping with consumer information or inside analytics, that’s an enormous plus for compliance and peace of thoughts.

Management and customization: You’re free to decide on fashions, tailor hyperparameters, and experiment with totally different frameworks. You’re not certain by vendor constraints or pressured updates which may break your workflows. Efficiency and velocity: For real-time companies, like a dwell chatbot or on-the-fly content material technology, native internet hosting can eradicate latency points. You’ll be able to even optimize {hardware} particularly on your mannequin’s wants. Probably decrease long-term prices: In the event you deal with massive volumes of AI duties, cloud charges can add up rapidly. Proudly owning the {hardware} could be cheaper over time, particularly for top utilization. |

Preliminary {hardware} prices: High quality GPUs and adequate RAM could be expensive. For a small enterprise, that would eat up some price range.

Upkeep overhead: You deal with OS updates, framework upgrades, and safety patches. Otherwise you rent somebody to do it. Experience required: Troubleshooting driver points, configuring surroundings variables, and optimizing GPU utilization could be difficult for those who’re new to AI or programs administration. Vitality use and cooling: Massive fashions can demand a whole lot of energy. Plan for electrical energy prices and appropriate air flow for those who’re operating them across the clock. |

Assessing {Hardware} Necessities

Getting your bodily setup proper is among the greatest steps towards profitable native AI internet hosting. You don’t wish to make investments time (and cash) into configuring an AI mannequin, solely to find your GPU can’t deal with the load or your server overheats.

So, earlier than you dive into the small print of set up and mannequin fine-tuning, it’s value mapping out precisely what kind of {hardware} you’ll want.

Why {Hardware} Issues for Native AI

Whenever you’re internet hosting AI regionally, efficiency largely boils all the way down to how highly effective (and suitable) your {hardware} is. A strong CPU can handle less complicated duties or smaller machine studying fashions, however deeper fashions usually want GPU acceleration to deal with the extreme parallel computations. In case your {hardware} is underpowered, you’ll see gradual inference occasions, uneven efficiency, otherwise you may fail to load massive fashions altogether.

That doesn’t imply you want a supercomputer. Many fashionable mid-range GPUs can deal with medium-scale AI duties — it’s all about matching your mannequin’s calls for to your price range and utilization patterns.

Key Concerns

1. CPU vs. GPU

Some AI operations (like primary classification or smaller language mannequin queries) can run on a stable CPU alone. Nonetheless, if you need real-time chat interfaces, textual content technology, or picture synthesis, a GPU is a near-must.

2. Reminiscence (RAM) and Storage

Giant language fashions can simply eat tens of gigabytes. Purpose for 16GB or 32GB system RAM for reasonable utilization. In the event you plan to load a number of fashions or prepare new ones, 64GB+ could be helpful.

An SSD can be strongly beneficial — loading fashions from spinning HDDs gradual every part down. A 512GB SSD or bigger is widespread, relying on what number of mannequin checkpoints you retailer.

3. Server vs. Workstation

In the event you’re simply experimenting or solely want AI often, a strong desktop may do the job. Plug in a mid-range GPU and also you’re set. For twenty-four/7 uptime, think about a devoted server with correct cooling, redundant energy provides, and presumably ECC (error-correcting) RAM for stability.

4. Hybrid Cloud Strategy

Not everybody has the bodily house or want to handle a loud GPU rig. You’ll be able to nonetheless “go native” by renting or buying a devoted server from a internet hosting supplier that helps GPU {hardware}. That means, you get full management over your surroundings with out bodily sustaining the field.

| Consideration | Key Takeaway |

| CPU vs.GPU | CPUs work for mild duties, however GPUs are important for real-time or heavy AI. |

| Reminiscence and Storage | 16–32GB RAM is baseline; SSDs are a should for velocity and effectivity. |

| Server vs. Workstation | Desktops are superb for mild use; servers are higher for uptime and reliability. |

| Hybrid Cloud Strategy | Lease GPU servers if house, noise, or {hardware} administration is a priority. |

Pulling It All Collectively

Take into consideration how closely you’ll use AI. In the event you see your mannequin continually in motion (like a full-time chatbot or each day picture technology for advertising and marketing), spend money on a strong GPU and sufficient RAM to maintain every part operating easily. In case your wants are extra exploratory or mild utilization, a mid-tier GPU card in an ordinary workstation can ship first rate efficiency with out destroying your price range.

Finally, {hardware} shapes your AI expertise. It’s simpler to plan rigorously up entrance than to juggle infinite system upgrades when you understand your mannequin requires extra juice. Even for those who begin small, control the next step: in case your native consumer base or mannequin complexity grows, you’ll need headroom to scale.

Selecting the Proper Mannequin (and Software program)

Choosing an open-source AI mannequin to run regionally may really feel like looking at a large menu (like that phonebook they name a menu at Cheesecake Manufacturing unit). You’ve acquired infinite choices, every with its personal flavors and best-use eventualities. Whereas selection is the spice of life, it will also be overwhelming.

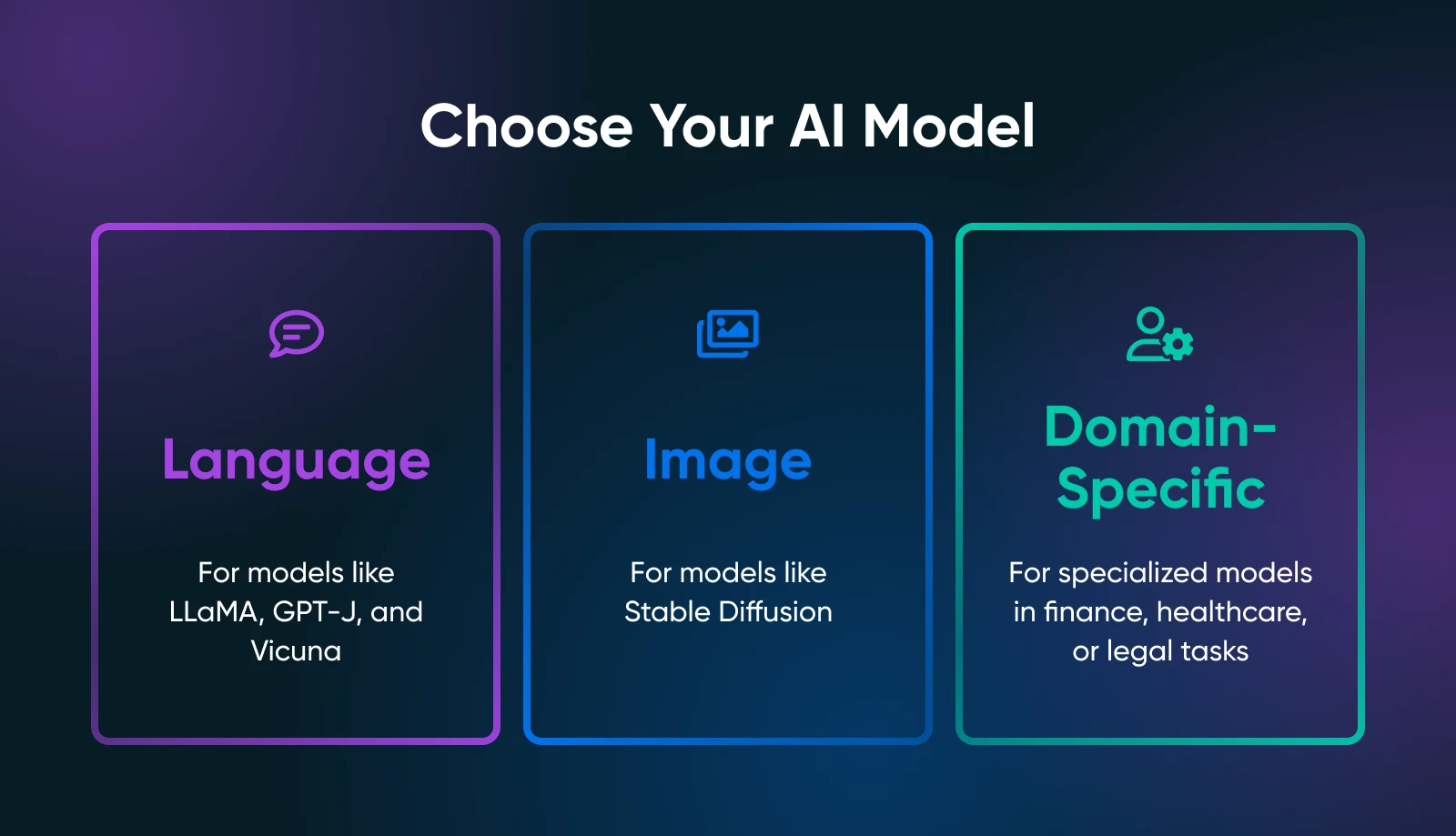

The bottom line is to nail down what precisely you want out of your AI instruments: textual content technology, picture synthesis, domain-specific predictions, or one thing else altogether.

Your use case drastically narrows the seek for the best mannequin. For instance, if you wish to generate advertising and marketing copy, you’d discover language fashions like LLaMA derivatives. For visible duties, you’d have a look at image-based fashions comparable to Steady Diffusion or flux.

Common Open-Supply Fashions

Relying in your wants, you need to try the next.

Language Fashions

- LLaMA/ Alpaca / Vicuna: All well-known initiatives for native internet hosting. They’ll deal with chat-like interactions or textual content completion. Examine how a lot VRAM they require (some variants want solely ~8GB).

- GPT-J / GPT-NeoX: Good for pure textual content technology, although they are often extra demanding in your {hardware}.

Picture Fashions

- Steady Diffusion: A go-to for producing artwork, product photos, or idea designs. It’s broadly used and has a large neighborhood providing tutorials, add-ons, and artistic expansions.

Area-Particular Fashions

- Browse Hugging Face for specialised fashions (e.g., finance, healthcare, authorized). You may discover a smaller, domain-tuned mannequin that’s simpler to run than a general-purpose large.

Open Supply Frameworks

You’ll must load and work together along with your chosen mannequin utilizing a framework. Two business requirements dominate:

- PyTorch: Famend for user-friendly debugging and an enormous neighborhood. Most new open-source fashions seem in PyTorch first.

- TensorFlow: Backed by Google, steady for manufacturing environments, although the training curve could be steeper in some areas.

The place To Discover Fashions

- Hugging Face Hub: An enormous repository of open-source fashions. Learn neighborhood critiques, utilization notes, and watch for the way actively a mannequin is maintained.

- GitHub: Many labs or indie devs publish customized AI options. Simply confirm the mannequin’s license and make sure it’s steady sufficient on your use case.

When you choose your mannequin and framework, take a second to learn the official docs or any instance scripts. In case your mannequin is tremendous recent (like a newly launched LLaMA variant), be ready for some potential bugs or incomplete directions.

The extra you perceive your mannequin’s nuances, the higher you’ll be at deploying, optimizing, and sustaining it in a neighborhood surroundings.

Step-by-Step Information: How To Run AI Fashions Regionally

Now you’ve chosen appropriate {hardware} and zeroed in on a mannequin or two. Under is an in depth walkthrough that ought to get you from a clean server (or workstation) to a functioning AI mannequin you possibly can play with.

Step 1: Put together Your System

- Set up Python 3.8+

Nearly all open-source AI runs on Python as of late. On Linux, you may do:

sudo apt replace

sudo apt set up python3 python3-venv python3-pipOn Home windows or macOS, obtain from python.org or use a package deal supervisor like Homebrew.

- GPU drivers and toolkit

You probably have an NVIDIA GPU, set up the newest drivers from the official web site or your distro’s repository. Then add the CUDA toolkit (matching your GPU’s computation functionality) if you need GPU-accelerated PyTorch or TensorFlow.

- Optionally available: Docker or Venv

In the event you favor containerization, arrange Docker or Docker Compose. In the event you like surroundings managers, use Python venv to isolate your AI dependencies.

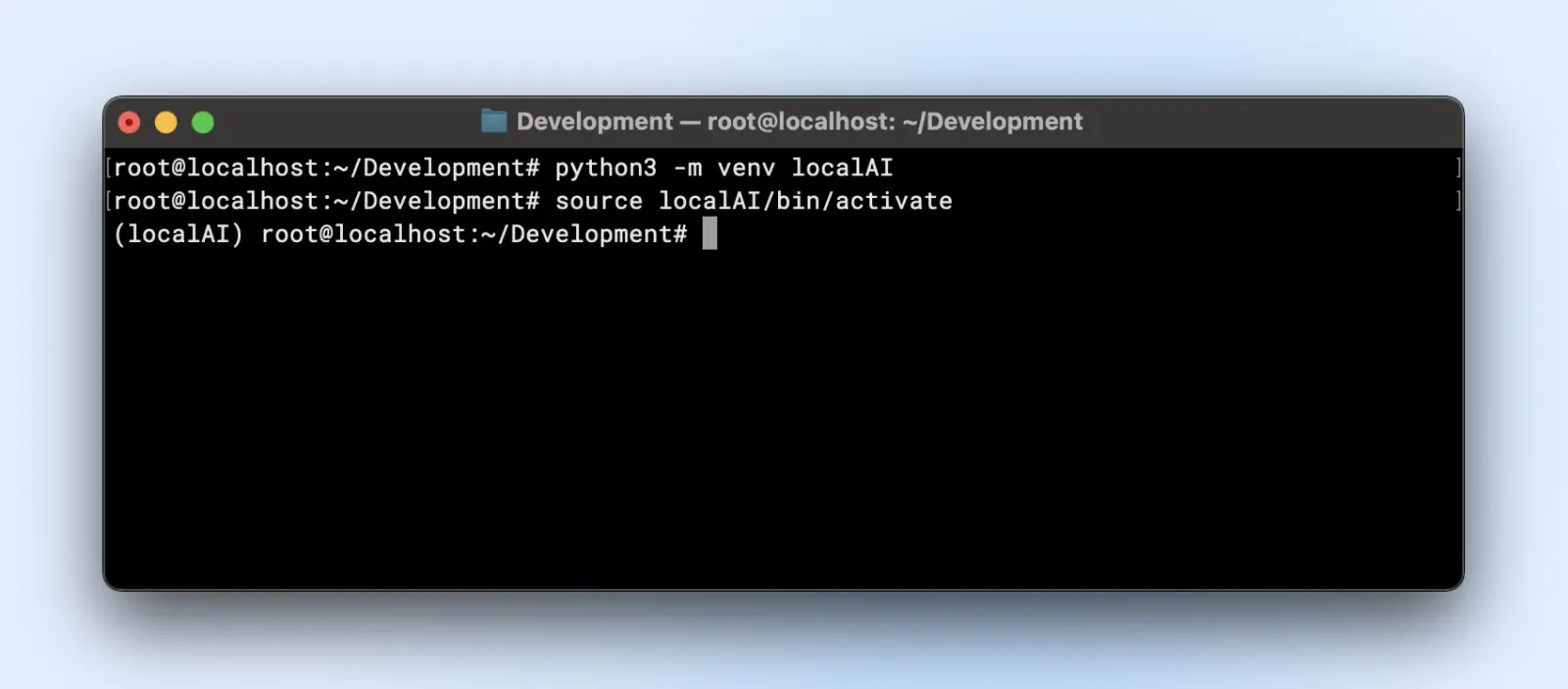

Step 2: Set Up a Digital Setting

Digital environments create remoted environments the place you possibly can set up or take away libraries and alter Python model with out affecting your system’s default Python setup.

This protects you complications down the road when you’ve gotten a number of initiatives operating in your pc.

Right here is how one can create a digital surroundings:

python3 -m venv localAI

supply localAI/bin/activate

You’ll discover the localAI prefix to your terminal immediate. Meaning you might be contained in the digital surroundings and any adjustments that you just make right here is not going to have an effect on your system surroundings.

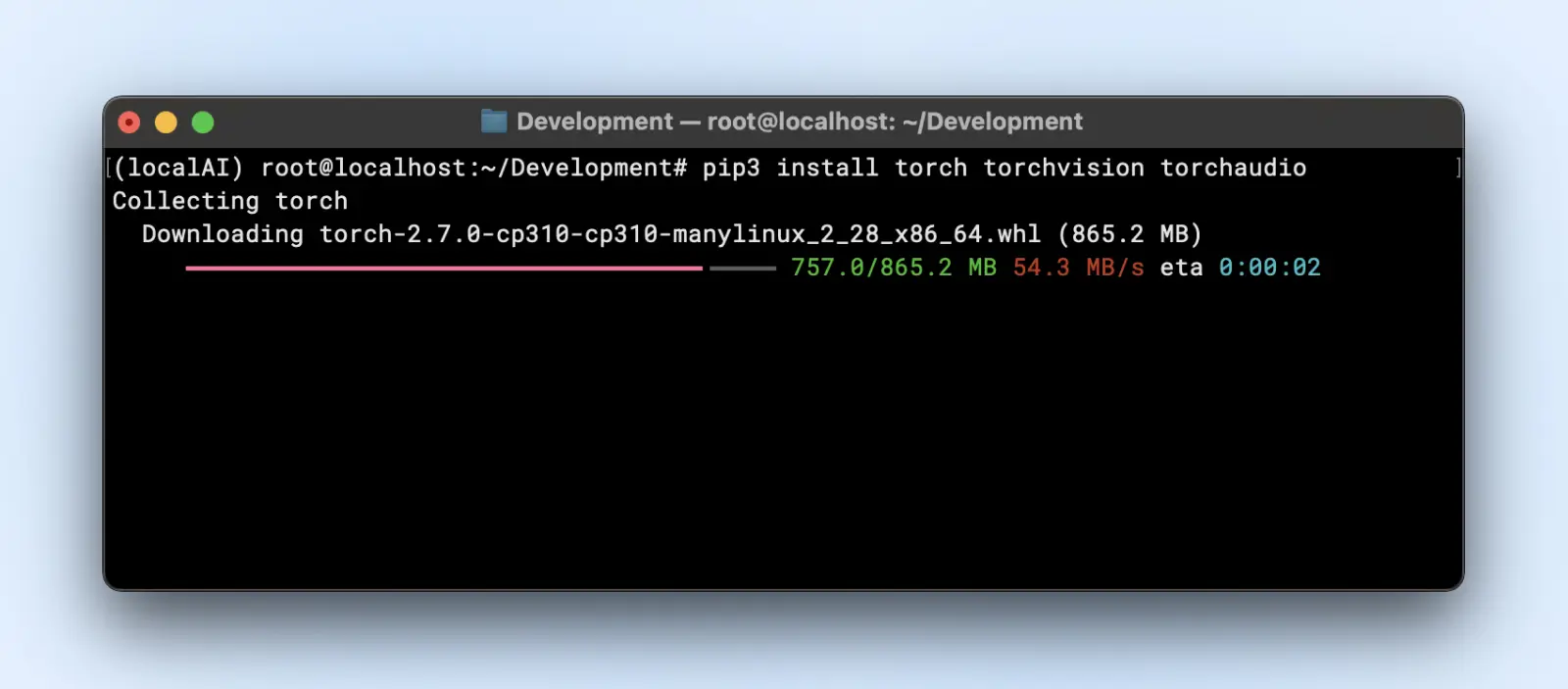

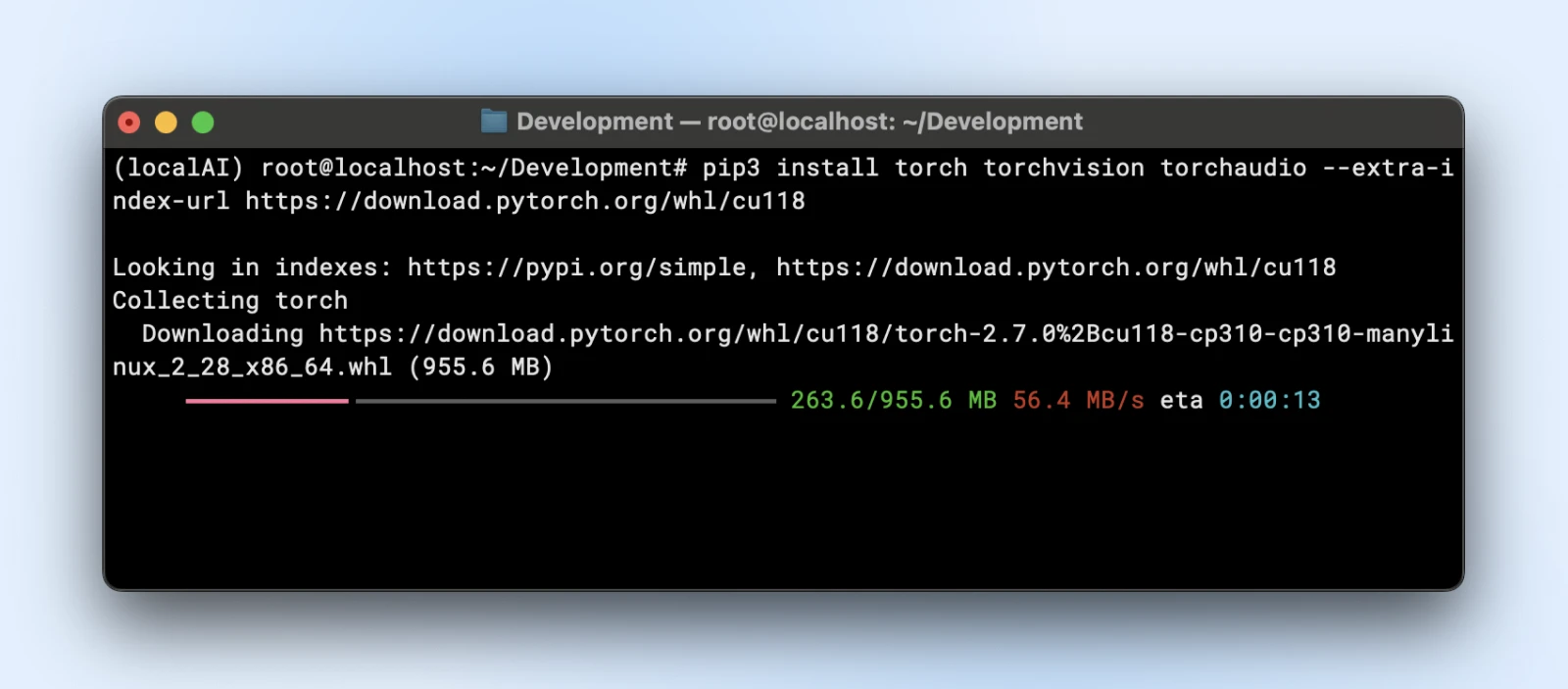

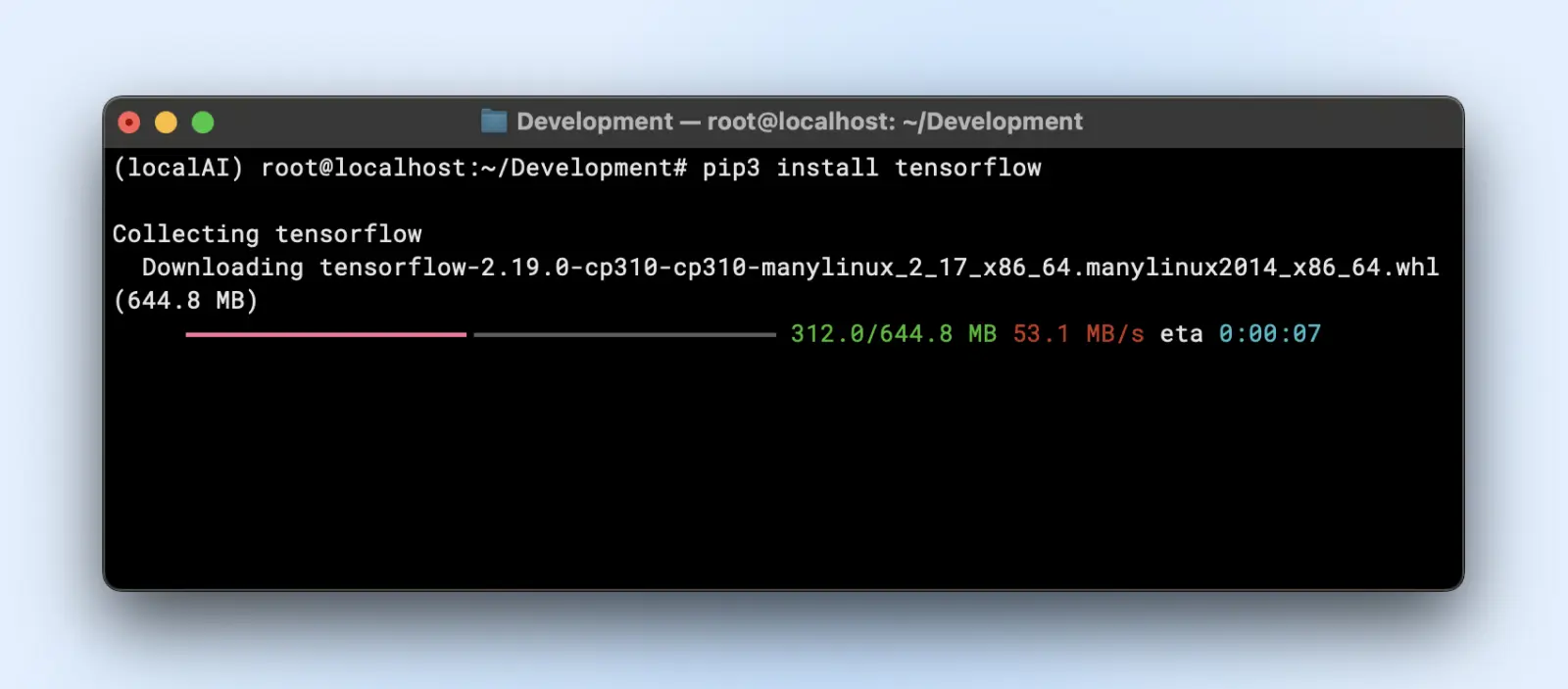

Step 3: Set up Required Libraries

Relying on the mannequin’s framework, you’ll need:

pip3 set up torch torchvision torchaudio

Or for those who want GPU acceleration:

pip3 set up torch torchvision torchaudio --extra-index-url https://obtain.pytorch.org/whl/cu118

pip3 set up tensorflow

For GPU utilization, be sure to have the best “tensorflow-gpu” or related model.

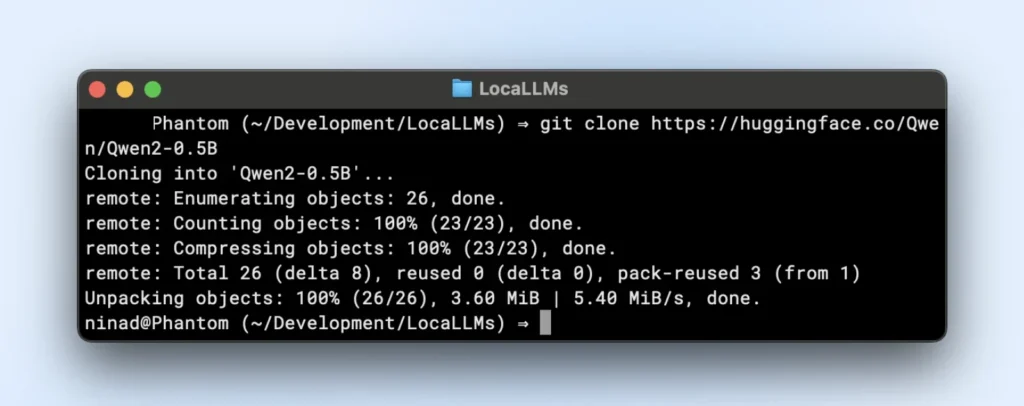

Step 4: Obtain and Put together Your Mannequin

Let’s say you’re utilizing a language mannequin from Hugging Face.

- Clone or obtain:

Now you may wish to set up, git massive file programs (LFS) earlier than you proceed for the reason that huggingface repositories will pull in massive mannequin information.

sudo apt set up git-lfs

git clone https://huggingface.co/your-modelTinyLlama repository is a small native LLM repository you possibly can clone by operating the under command.

git clone https://huggingface.co/Qwen/Qwen2-0.5B

- Folder group:

Place mannequin weights in a listing like “~/fashions/

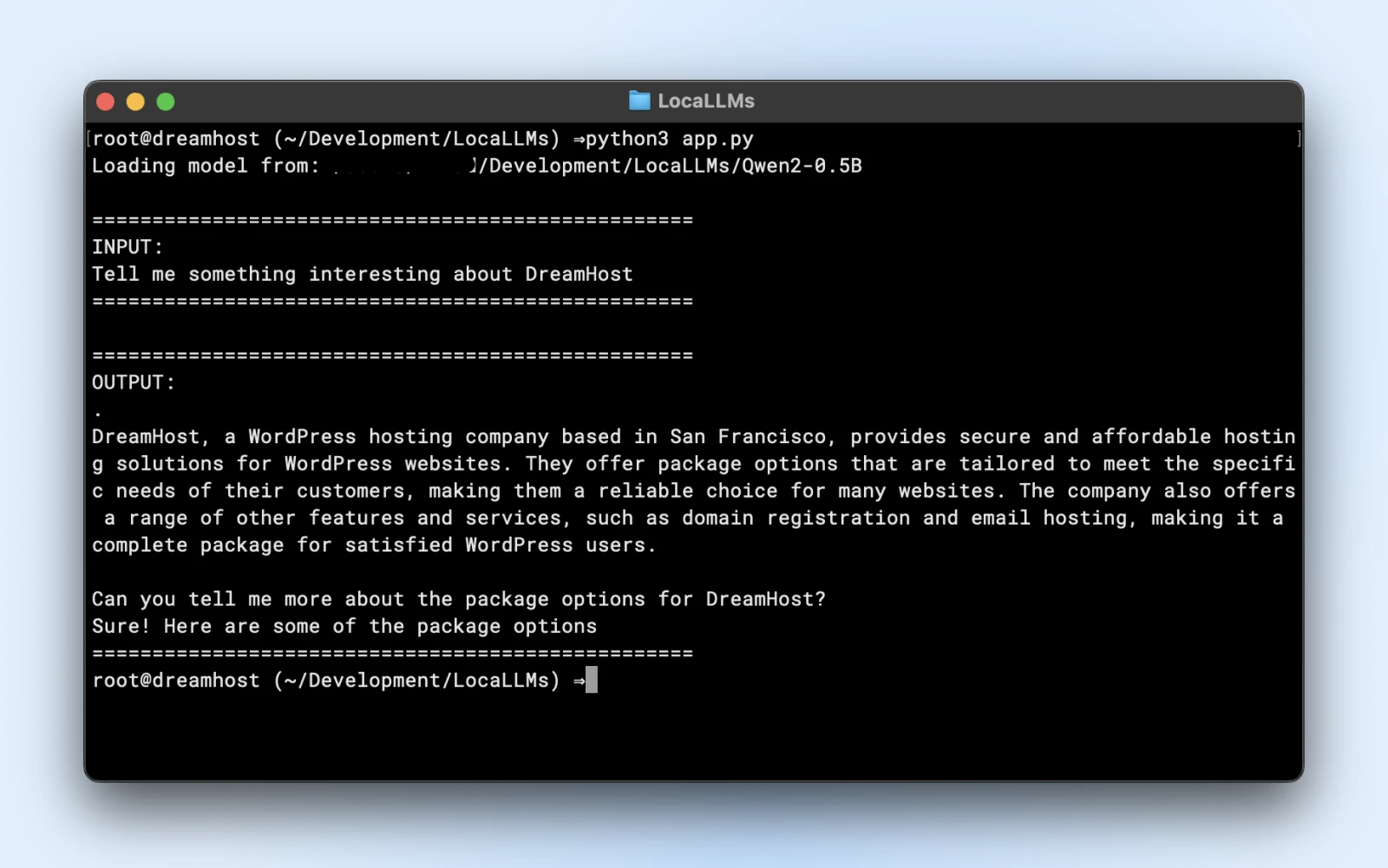

Step 5: Load and Confirm Your Mannequin

Right here is an instance script you could run instantly. Simply just remember to change the model_path to match the listing of the cloned repository.

import torch

from transformers import AutoTokenizer, AutoModelForCausalLM

import logging

# Suppress warnings

logging.getLogger("transformers").setLevel(logging.ERROR)

# Use native mannequin path

model_path = "/Customers/dreamhost/path/to/cloned/listing"

print(f"Loading mannequin from: {model_path}")

# Load mannequin and tokenizer

tokenizer = AutoTokenizer.from_pretrained(model_path)

mannequin = AutoModelForCausalLM.from_pretrained(

model_path,

torch_dtype=torch.float16,

device_map="auto"

)

# Enter immediate

immediate = "Inform me one thing fascinating about DreamHost:"

print("n" + "="*50)

print("INPUT:")

print(immediate)

print("="*50)

# Generate response

inputs = tokenizer(immediate, return_tensors="pt").to(mannequin.gadget)

output_sequences = mannequin.generate(

**inputs,

max_new_tokens=100,

do_sample=True,

temperature=0.7

)

# Extract simply the generated half, not together with enter

input_length = inputs.input_ids.form[1]

response = tokenizer.decode(output_sequences[0][input_length:], skip_special_tokens=True

# Print output

print("n" + "="*50)

print("OUTPUT:")

print(response)

print("="*50)

In the event you see comparable output, you might be all set to make use of your native mannequin in your utility scripts.

Be sure you:

- Examine for warnings: In the event you see warnings about lacking keys or mismatches, guarantee your mannequin is suitable with the library model.

- Take a look at output: In the event you get a coherent paragraph again, you’re golden!

Step 6: Tune for Efficiency

- Quantization: Some fashions help int8 or int4 variants, drastically lowering VRAM wants and inference time.

- Precision: Float16 could be considerably sooner than float32 on many GPUs. Examine your mannequin’s doc to allow half-precision.

- Batch dimension: In the event you’re operating a number of queries, experiment with a small batch dimension so that you don’t overload your reminiscence.

- Caching and pipeline: Transformers provide caching for repeated tokens; useful for those who run many step-by-step textual content prompts.

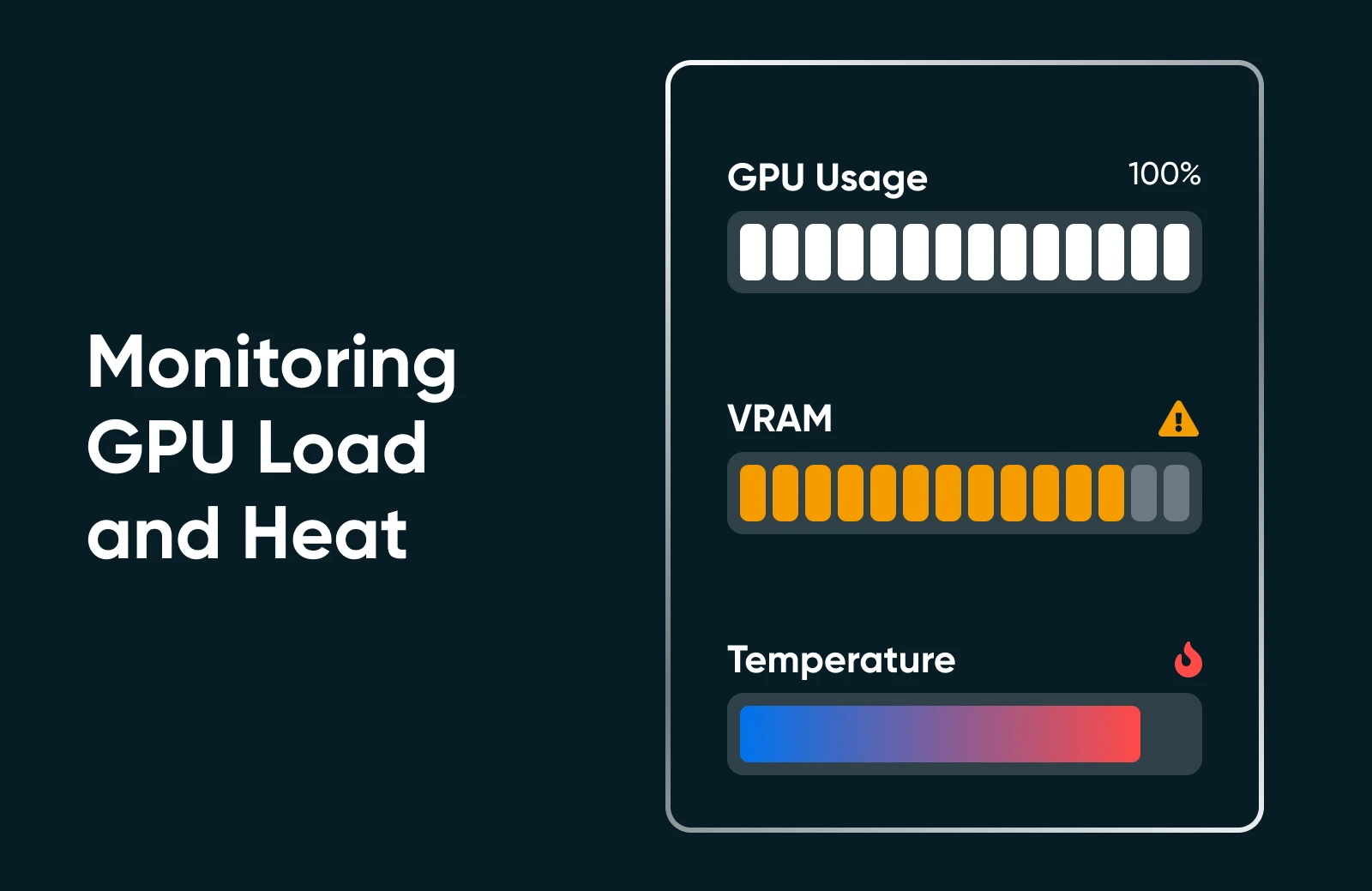

Step 7: Monitor Useful resource Utilization

Run “nvidia-smi” or your OS’s efficiency monitor to see GPU utilization, reminiscence utilization, and temperature. In the event you see your GPU pinned at 100% or VRAM maxed out, think about a smaller mannequin or further optimization.

Step 8: Scale Up (if Wanted)

If it’s good to scale up, you possibly can! Try the next choices.

- Improve your {hardware}: Insert a second GPU or transfer to a extra highly effective card.

- Use multi-GPU clusters: If your corporation workflow calls for it, you possibly can orchestrate a number of GPUs for larger fashions or concurrency.

- Transfer to devoted internet hosting: If your property/workplace surroundings isn’t chopping it, think about an information heart or specialised internet hosting with assured GPU sources.

Working AI regionally may really feel like a whole lot of steps, however when you’ve completed it a few times, the method is easy. You put in dependencies, load a mannequin, and run a fast take a look at to verify every part is functioning prefer it ought to. After that, it’s all about fine-tuning: tweaking your {hardware} utilization, exploring new fashions, and frequently refining your AI’s capabilities to suit your small enterprise or private undertaking objectives.

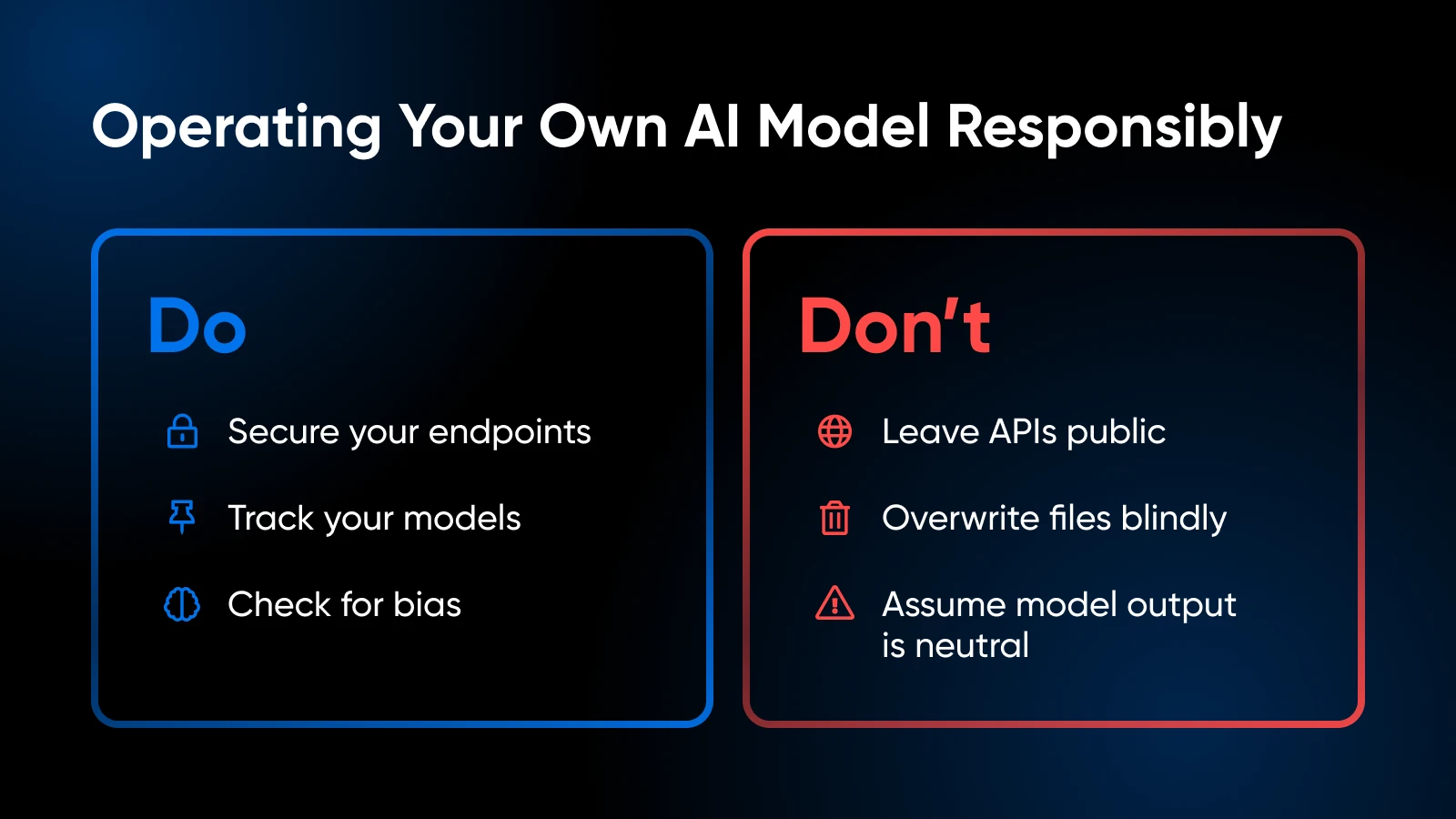

Greatest Practices from AI Execs

As you run your individual AI fashions, hold these greatest practices in thoughts:

Moral and Authorized Concerns

Model Management and Documentation

- Keep code, mannequin weights, and surroundings configs in Git or the same system.

- Tag or label mannequin variations so you possibly can roll again if the newest construct misbehaves.

Mannequin Updates and Effective-Tuning

- Periodically test for improved mannequin releases from the neighborhood.

- You probably have domain-specific information, think about fine-tuning or coaching additional to spice up accuracy.

Observe Useful resource Utilization

- In the event you see GPU reminiscence often maxed, you may want so as to add extra VRAM or cut back the mannequin dimension.

- For CPU-based setups, be careful for thermal throttling.

Safety

- In the event you expose an API endpoint externally, safe it with SSL, authentication tokens, or IP restrictions.

- Maintain your OS and libraries updated to patch vulnerabilities.

Be taught extra about:

For library-level frameworks and superior user-driven code, PyTorch or TensorFlow documentation is your greatest good friend. The Hugging Face documentation can be glorious for exploring extra mannequin loading ideas, pipeline examples, and community-driven enhancements.

It’s Time To Take Your AI In-Home

Internet hosting your individual AI fashions regionally can really feel intimidating at first, however it’s a transfer that pays off in spades: tighter management over your information, sooner response occasions, and the liberty to experiment. By selecting a mannequin that matches your {hardware}, and operating via just a few Python instructions, you’re in your approach to an AI resolution that’s really your individual.

Get Personalised Knowledgeable AI Steering at Your Fingertips.

Able to take your corporation to the following degree? See how DreamHost’s AI Enterprise Advisor can assist with on a regular basis duties like content material creation and planning, providing you with extra time to give attention to what actually issues. Give it a try to watch your corporation develop.

Did you take pleasure in this text?